As an Excel user, I’m always looking for ways to automate repetitive tasks and make my workflow more efficient. That’s where Office Scripts come in. The way office scripts are advertised out of the box is that they’re a feature in Excel that enables you to automate tasks by recording your actions and replay them whenever I want. you can create scripts using the Action Recorder, which is great for consistent actions.

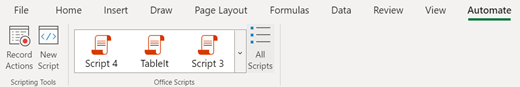

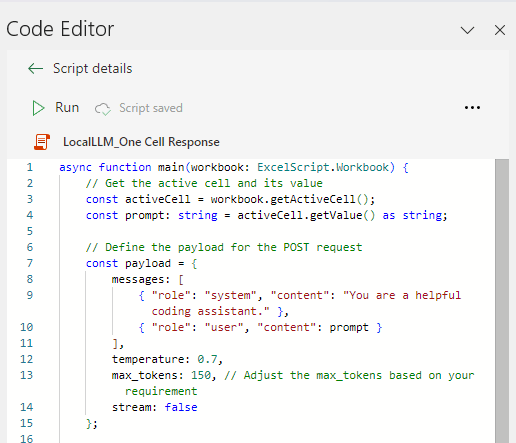

But what if you want something more? Office scripts can handle that too, as the underlying technology that drives your office scripts is Typescript, which you can use the Code Editor to build out more advanced scripts. In this post, we’ll take a closer look at how I used the Code Editor to build an Office Script that integrates with a local language model (LLM) using a REST API call.

Not Every Problem Can Be Solved with Excel.. Or Can They?

I had a dataset with hundreds of rows that required me to make decisions based on multiple pieces of information. The problem was that these decisions couldn’t be made using Excel functions alone. While you can build out complex formulas to work with text, such as using the ISNUMBER(SEARCH(x)) function and other methods, they’re very basic and not suitable for my task.

I knew I needed a more advanced solution, one that could provide a level of intelligence to the process. That’s when I turned to language models (LLMs). As I discussed in my previous post about self-hosting open source langauge models, LLMs are not to be confused with artificial general intelligence (AGI). Instead, they’re designed to predict the next word in a sequence based on their training data. This gives the impression that they’re “thinking” when they provide responses, especially when they get it spot on. However, as anyone who’s worked with LLMs knows, they can also produce “hallucinations” – responses that are incorrect or nonsensical.

To benefit the power of LLMs in my Excel project, I needed to provide context and guidance to ensure accurate responses. I realized that by concatenating relevant information from other cells using basic Excel formulas, I could create a prompt that would help the LLM provide a more accurate answer. It was a simple yet effective approach: “Can you tell me the best match for x, pick the answer from the list of y?”

With this approach in mind, I set out to integrate the LLM into my Excel workflow using Office Scripts. The basic steps to my code is:

- Self-host a language model using LM Studio (how do I do that?)

- Get the current selected cell

- Send the text to the local language model as a prompt

- Return the result to the cell in the next column

async function main(workbook: ExcelScript.Workbook) {

// Get the active cell and its value

const activeCell = workbook.getActiveCell();

const prompt: string = activeCell.getValue() as string;

// Define the payload for the POST request

const payload = {

messages: [

{ "role": "system", "content": "You are a helpful coding assistant." },

{ "role": "user", "content": prompt }

],

temperature: 0.7, // Adjust the temperature based on your requirement

max_tokens: 150, // Adjust the max_tokens based on your requirement

stream: false

};

const uri: string = 'http://localhost:1234/v1/chat/completions'; // LM Studio server URL

try {

// Make a call to the local LLM using the payload

const chatCompletionResponse: Response = await fetch(uri, {

method: "POST",

headers: {

"Content-Type": "application/json"

},

body: JSON.stringify(payload)

});

if (!chatCompletionResponse.ok) {

throw new Error(`Server error: ${chatCompletionResponse.statusText}`);

}

// Define the expected response structure

interface ChatCompletionResponse {

choices: { message: { content: string } }[];

}

const chatCompletionData: ChatCompletionResponse = await chatCompletionResponse.json();

// Extract the response content

const responseContent: string = chatCompletionData.choices[0].message.content;

// Place the response in the adjacent cell

const nextCell = activeCell.getOffsetRange(0, 1);

nextCell.setValue(responseContent);

} catch (error: unknown) {

console.log("Error making request:", error);

}

}Code language: TypeScript (typescript)Here is an example of how you might use this code.

In column A, I have a list of yellow things. In column B, I have something I want to ask about in row 2, then in row 6, I’ve built out my LLM prompt with the following Excel formula:

="From the following list: "&ARRAYTOTEXT(A1:A25,0)&". Which item best describes "&B2Code language: JavaScript (javascript)With a slight modification to the code above, I’m sending my answer to cell B8.

As you can see, my question to the LLM becomes:

From the following list: Sunflowers, Bananas, Lemons, Daffodils, Corn, Yellow peppers, Bumblebees, Rubber ducks, Pineapples, School buses, Marigolds, Canaries, Yellow jackets, Yellow tulips, Goldfinches, Cheese, Mustard, Yellow apples, Yellow squash, Yellow traffic lights, Yellow highlighters, Yellow post-it notes, Yellow balloons, Sweetcorn, Dandelions. Which item best describes a fruit often associated with pyjamas

And the response is:

The item that best fits the description of being “a fruit often associated with pyjamas” would likely be bananas due to their yellow color and common presence in comfort-oriented clothing like pajama sets, which are frequently adorned with whimsical designs including fruits. While there might not always be an explicit connection between the two items (bananas and pyjamas), it’s a playful association that fits within cultural references to bananas as part of bedroom furnishings or thematic decorations for children’s nightwear.

The response is far longer than we’d like it to be, so how do we fix that? When working with LLM API calls, the easiest ways to adjust the responses are to adjust the temperature and the token limit and then review either your prompt or the system role.

Think of tokens as words, or parts of words, and the temperature as verbosity from a range of 0 – 1, with 1 being more verbose and 0 being more concise.

After changing the temperature to 0.2 and the token limit to 20 and with no changes to the prompt, the answer is far less useful but its certainly shorter:

The term “fruit” is typically used to describe the edible part of plants that usually

The next steps are to adjust the system role, the prompt, or both, however sometimes, this can result in things getting even worse. For example, using

const payload = {

messages: [

{ "role": "system", "content": "You are a helpful object classification assistant" },

{ "role": "user", "content": From the following list: Sunflowers, Bananas, Lemons, Daffodils, Corn, Yellow peppers, Bumblebees, Rubber ducks, Pineapples, School buses, Marigolds, Canaries, Yellow jackets, Yellow tulips, Goldfinches, Cheese, Mustard, Yellow apples, Yellow squash, Yellow traffic lights, Yellow highlighters, Yellow post-it notes, Yellow balloons, Sweetcorn, Dandelions. Which item best describes a fruit often associated with pyjamas? Respond in 3 words or less

}

],

temperature: 0.2,

max_tokens: 20, // Adjust the max_tokens based on your requirement

stream: false

};Code language: JavaScript (javascript)We end up with this:

“Yellow peppers” (though not typically worn as clothing) are the closest match

While we’re certainly making the responses shorter, the core part of the response is completely wrong. When you’re stuck getting silly errors and non-sensical results, this is when you need to start thinking about the model you’re using.

For each of these examples, I’ve been using Microsoft’s open-source Phi 3 mini instruct model, which is usually quite good when dealing with larger datasets, but it looks like it’s not that great for object classification tasks. But that’s the thing about hosting local LLMs, you can pick and choose what works best for you within the constraints of your hardware. There are so many variations of open source LLMs out there that are suited to particular tasks, or where others have tuned them to perform specific uses. You can even take one as a base and fine-tune it based on your own datasets.

With no change other than changing to Meta’s Llama 3 Instruct model, running the script again, things are looking much better!

Bananas are best!

In this situation, Llama 3 is clearly much better at following the instructions provided, we asked it to respond in 3 words or less, and it did exactly that, and what’s more, it was correct. That just leaves us to make a minor amendment to our prompt, instead of asking the LLM to response “in 3 words or less”, we now ask it to “Respond with only the correct answer from the list” and finally we get the response we want:

Bananas

To be clear, this isn’t an exercise in saying that Llama 3 is better than Phi 3, but moreso to demonstrate that there is more than just your prompt and context required to get the desired results. I personally use both Llama 3 and Phi 3 (and many others) quite often depending on the task at hand.

Can’t Self Host an LLM? No Worries!

If you’re unable to self-host an LLM on your machine, you’ve not nothing to worry about. Many of the local and cloud hosted open source LLM tools API is the same as the OpenAI API, so it doesn’t matter if you’re using OpenAI, VLLM, LM Studio, Ollama or many others, this same code should work for you. The only difference being that you would need to change the URI used in the code, and for cloud hosted services, you would need to include an API key.

Office Scripts – Not Just for Excel

One of the coolest things about Office Scripts is that they’re not limited to a single Excel file. Once you’ve saved a script, it’s automatically loaded into Excel every time you open it, as long as you’re connected to your Office 365 account or OneDrive. This means you can access your scripts from any device, without having to recreate them.

But that’s not all – Office Scripts can also be integrated with Power Automate, allowing you to build complex flows that call scripts from your library. For example, I’ve built a regex script that I can call from Power Automate, which fills a gap in Power Automates native functionality. This opens up a whole new world of possibilities for automating tasks and workflows.

No Comments